Image Perception and Frame Rates

A while back, I wrote a little article on screen resolution which hopefully enlightened readers on what it actually means, and demystified the world behind the jargon, which is so frequently exploited by TV salesmen to sell you expensive TVs. Part of the inspiration for that article was this XKCD comic, and recently there has been a lot of talk in the movie industry about frame rates, which leads me to explaining the mouseover text in that comic.

The complaint from tech-heads is often two-pronged. First, they complain about resolution. This complaint is usually leveled at TV, in particular the fuss that is made about HDTV, whose resolution is not actually particularly high (see above-referenced article). The second complaint concerns frame rates. There are many standards in existence these days, but only two are relevant here: 24p and 30p.

Very old TVs (and some new ones) use what is called interlaced scan, which is where the odd and even horizontal lines of the screen come on and off in an alternating pattern. Anything new (read: digital) would be in progressive scan, where the entire image changes with the frame rate. The “p” in the above standards refers to progressive scan. 24p simply means progressive scan at 24 frames per second.

Standard HDTV is usually broadcast in 30p, generally 1080/30p, which means 1080 lines of vertical resolution at a progressive scan of 30 frames per second. The complaint is that the industry standard for feature films is 24 frames per second. It has been this way for a very long time. I’m not sure why they chose 24 frames per second, but I have a feeling it had something to do with the (primitive) technology of the time. It is certainly possible for our eyes to distinguish between 30 and 24 frames per second, and it is often suggested that, since TV is 30 frames per second, it has “trained” our eyes to associate the higher frame rate with TV. By that argument, movies are condemned to stay at 24p; otherwise our brains would automatically think that they are “TV”. James Cameron recently made ripples in the film making industry by suggesting that he might try shooting at 60fps.

After thinking about this for a long time, I think I have figured out the problem and it doesn’t have anything to do with the frame rate per se. The key to understanding what’s going on here is understanding the oft-confused difference between frame rate and shutter speed. We begin (as I did in the article on resolution) by examining the most fundamental piece of hardware in our equation – the human eye. In the previous article, I established a reasonable theoretical maximum resolution for certain sizes of screens, based on the maximum acuity of the eye and the anticipated viewing distances between the eye and the screens. This, however, is slightly more complicated.

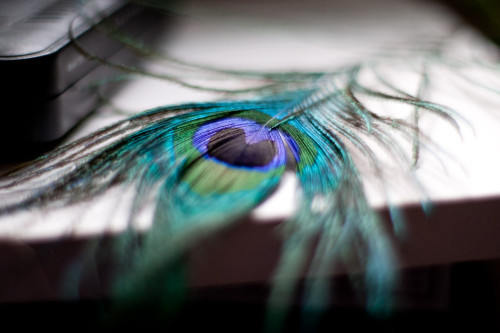

The human eye perceives things in a certain way. The maximum aperture of our eyes is roughly equivalent to f/2 on a camera lens, and the resolution in the center is significantly higher than towards the edges. This is part of the reason that pictures like the one above, taken with a wide-aperture lens on a professional SLR camera are generally more appealing than photos taken on cheap point-and-shoot compact cameras where everything seems to be in focus – the former is much closer to what our eyes actually see. Selective focus using what’s called “shallow depth of field” is more than just a simple trick of a photographer to draw your attention to a certain part of the picture – it simulates what our eyes actually do when we draw our attention to a certain portion of our field of view.

Frame rates, however, are trickier to figure out than the relative aperture size of the human eye. The world (if physics is to be believed) happens as a continuous movement; one could say at an “infinite” frame rate. In high school, we once conducted an experiment where we set up a camera shutter so we could look through it and try to recognize an image. We found that most of us could recognize images when the shutter was open for as little as 1/250 of a second, but anything shorter was too brief. Nobody could recognize anything at exposures shorter than 1/500 of a second, although there are likely a handful of people in the world who can. However, many experiments have been done where subjects were shown filmed sequences at over 100 frames per second, and they described the result as “artificial” and said it sometimes made them feel ill.

I think the real problem here is that when motion film is shot at, say, 100fps, each of the individual frames is necessarily exposed for 1/100 of a second or less. This takes away a lot of blur. In the same way that our eyes naturally blur out most of an image if we aren’t looking directly at it, we also blur out a lot of movement when we aren’t directly tracking it. Traditionally, 24fps film is shot at what is called a 180 degree shutter (see diagram below).

This means that the shutter is open for half of each frame interval, so the shutter speed is usually 1/48 of a second. Motion picture lenses are usually very wide aperture (and very expensive) to get that selective focus “look”, and the exposure is controlled using a combination of extensive lighting setups and neutral density filters (sunglasses for the lenses). Believe it or not, almost all film is shot at this shutter speed. There are some notable exceptions. In Chariots of Fire, for the scene where they’re running along the beach, they painstakingly cut out every second frame and duplicated the frame that was not cut, to give the effect of 1/48 shutter at 12 frames per second. In the more recent film Gladiator, for the fight scenes, the shutter speed was approximately doubled, but the frame rate was kept the same (1/100 shutter at 24 frames per second). The interesting thing to note here is that both effects are noticeable, even if they weren’t immediately apparent.
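The relationship between shutter angle, frame rate, and exposure time can be sketched in a few lines of Python. This is only an illustration of the arithmetic, assuming an idealized rotary shutter that is open for `angle / 360` of each frame interval; the function names `exposure_time` and `shutter_angle` are made up for this example.

```python
from fractions import Fraction


def exposure_time(fps, shutter_angle_deg=180):
    """Exposure time in seconds, assuming an idealized rotary shutter
    that is open for shutter_angle_deg / 360 of each frame interval."""
    return Fraction(shutter_angle_deg) / 360 / Fraction(fps)


def shutter_angle(fps, exposure):
    """Equivalent shutter angle in degrees for a given frame rate
    and exposure time (in seconds)."""
    return 360 * Fraction(fps) * Fraction(exposure)


# Traditional film: 24 fps with a 180 degree shutter.
standard = exposure_time(24)                      # Fraction(1, 48)

# The two exceptions mentioned above, expressed as equivalent angles.
chariots = shutter_angle(12, Fraction(1, 48))     # 90 degrees
gladiator = shutter_angle(24, Fraction(1, 100))   # 432/5 = 86.4 degrees

print(standard, chariots, float(gladiator))
```

Seen this way, both exceptions use roughly half the usual shutter angle, which is one way to describe why they look different from ordinary 24fps footage.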

The point here is that, for relative motion, we perceive things with a blur. Changing that blur in any way will produce a noticeable effect. HDTV in 30 frames per second was probably shot with a shutter speed of 1/60 of a second, which is significantly faster than 1/48. In addition, TV cameras have relatively small image sensors, usually 2/3″ format, which has about 1/17 the area of a full-frame sensor. That translates to much less ability to offer selective focus in the way a proper motion picture camera can. Part of the reason is that with live TV, a small sensor makes it easier to keep everything in focus, and each camera is expected to need only one operator. In motion picture shooting, by contrast, everything is meticulously planned, including the exact point of focus, and it usually takes 3 people to operate 1 camera.
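To put rough numbers on how shutter speed changes blur, here is a small sketch. It assumes the simplest possible model: an object crossing the frame at a constant speed leaves a streak whose length is speed multiplied by exposure time. The speed of 960 pixels per second is a hypothetical value chosen only because it divides evenly, and `blur_length` is an illustrative name.

```python
from fractions import Fraction


def blur_length(speed_px_per_s, exposure):
    """Length of the motion streak in pixels, assuming constant speed
    during the exposure (a deliberately simplified model)."""
    return speed_px_per_s * Fraction(exposure)


speed = 960  # hypothetical object speed in pixels per second

film = blur_length(speed, Fraction(1, 48))        # 20 px
tv = blur_length(speed, Fraction(1, 60))          # 16 px
gladiator = blur_length(speed, Fraction(1, 100))  # 48/5 = 9.6 px

for label, streak in [("1/48", film), ("1/60", tv), ("1/100", gladiator)]:
    print(f"shutter {label}: {float(streak)} px of blur")
```

Under this model the same movement leaves a streak that is a fifth shorter at 1/60 than at 1/48, and less than half as long at 1/100.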

So really, the problem has nothing to do with the frame rate itself. Our eyes are clearly OK with perceiving an infinitely-high frame rate (a.k.a. the real world), but the shutter speed at which the film is recorded must, in most cases, simulate our ordinary perception of the world, including blur; otherwise our minds will reject it as “fake”. The practical problem with this is how to shoot at shutter speeds around 1/40 and 1/50 of a second, yet project at frame rates where each frame may only be visible for 1/60 of a second or less. One promising solution that has been tried is software interpolation (basically “guessing” the intermediate frames), which seems like a pretty good idea. However, the software is not sufficiently advanced to guess very well. Typically hard edges and contrasts are what gets interpolated (because it’s easy for software to “see” these things), but the key to achieving the proper effect is to also interpolate the blur.

As long as motion is in any way mechanically-captured, it will be impossible to project film at a higher frame rate than the reciprocal of the shutter speed (e.g. 60fps for 1/60 of a second). However, there is promise in the area of electronic motion picture capture, although the bandwidth capacity for image sensors still has to increase significantly for this to be possible (as well as processing power at the point of capture, not to mention the bandwidth increases necessary for higher resolutions, like I mentioned in my other article). Still, the rate at which technology is advancing in this area is breathtaking to say the least, and I feel confident that it is entirely conceivable within our lifetimes that we will see maximal resolution films in very high frame rates that still look “natural” with proper out-of-focus blur AND motion blur.
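The constraint described above is simple arithmetic: a frame cannot be exposed for longer than the interval between frames. The sketch below checks that condition, assuming a single shutter exposing one frame at a time; `max_frame_rate` and `exposure_fits` are hypothetical helper names for this example.

```python
from fractions import Fraction


def max_frame_rate(exposure):
    """Highest frame rate (fps) at which each frame can still be
    exposed for `exposure` seconds: the reciprocal of the exposure."""
    return 1 / Fraction(exposure)


def exposure_fits(exposure, fps):
    """True if an exposure of `exposure` seconds fits inside one
    frame interval at the given frame rate."""
    return Fraction(exposure) <= 1 / Fraction(fps)


print(max_frame_rate(Fraction(1, 60)))     # 60
print(exposure_fits(Fraction(1, 48), 24))  # True: traditional film
print(exposure_fits(Fraction(1, 48), 60))  # False: the film-like blur does not fit
```

The last line is the practical problem in miniature: the 1/48 exposure that gives film its familiar blur is longer than a single frame interval at 60fps.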

But real life projects at infinite framerate and (assuming you’re looking through a homogenous fluid like air or water) infinite crispness, so why is blur necessary in film? The eye’s hardware “converts” this to lower framerate and less crisp information, so I have a hard time imagining feeling uncomfortable watching high-framerate high-definition film. On the contrary, watching a 24fps film while the camera pans is often pretty unpleasant.

good questions…

the reason this happens is because of focus. When an object moves in real life, and we track it with our eyes, other stuff goes out of focus automatically and blurs automatically because we’re changing our visual frame of reference. When this happens on a screen, everything is on the same plane of focus, and THAT is what pisses our eyes off. They can’t decouple the moving (but relatively still) object from the still (but moving) background. So if that background is sharp and perfectly crisp it messes with some part of our visual cortex. even 3D doesn’t get around this problem, and this is one of the reasons that “good” 3D films take place within a reasonably narrow band of the screen’s distance from the viewer, otherwise our eyes would try to focus on faraway objects which are actually focused much closer…

panning is always going to be a problem because our eyes don’t pan smoothly anyway. think about how your eyes move when you read quickly. they jump from one fixed point to another – when you speed read, you just try to jump further each time. our eyes are hopeless at seeing stuff that’s actually moving relative to where they are pointing. when your head “pans” across an expansive landscape, your eyes do the same thing, otherwise you would just see a blur, even at relatively low speeds. Ironically enough, an infinite (or just very high) frame rate pan on a movie screen would solve this problem (but possibly give us motion sickness), but if there’s any movement relative to the scene being panned, then it will have to be blurry.

Some rides at amusement parks actually do this: you sit in a thing that moves a little while some kind of panoramic screen projects a scene at a high frame rate (typically 60fps, but it may be higher now). But even those scenes are very carefully planned so that there’s not much movement relative to the background, or if there is, the background is blue sky or outer space.